OpenCV on Android (Color Detection)

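This is the second installment of my "trying out OpenCV on Android" series.

This time the topic is color detection.

I found some interesting code online, so I tried it out right away.

The original source is here.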

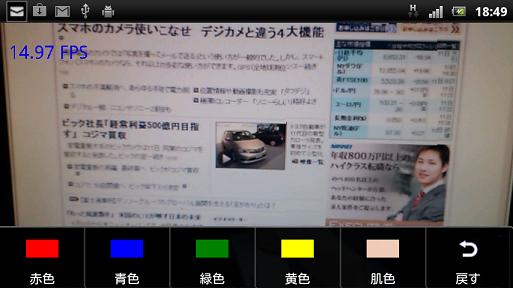

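To start with, it handled two colors: red and skin tone.

Color detection sample code

Update: I have increased the number of colors that can be detected.

Here is the result.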

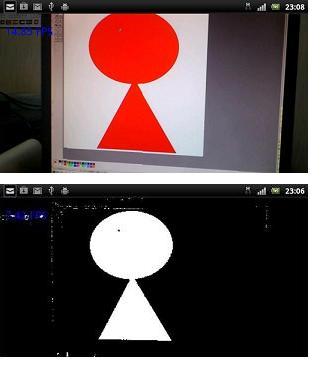

As an example, let's detect red.

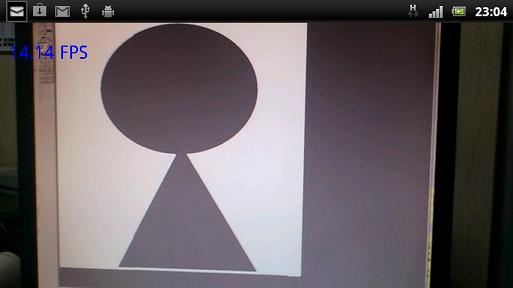

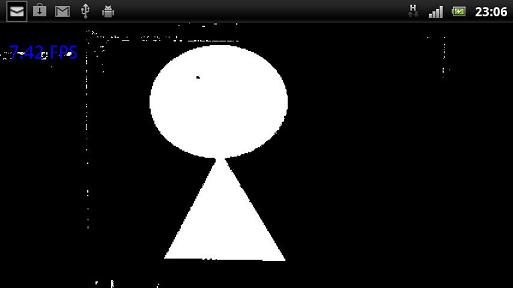

I put a screen like this up on a monitor. This is the original image.

Tapping 「赤色」 (Red) in the menu starts detection.

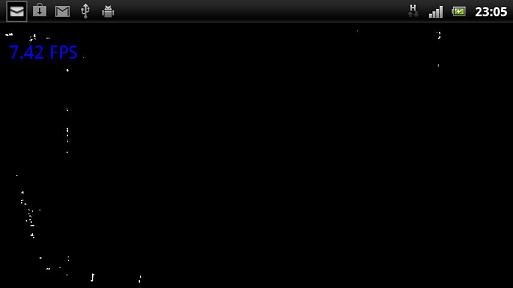

When there is no red in view and nothing is detected, the screen looks like this.

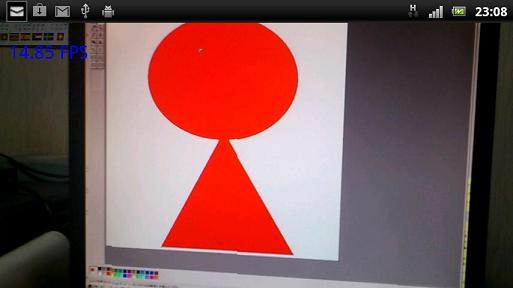

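Change the color, and it gets detected like this.

This is the image with the color changed.

Please try skin tone for yourself. Detecting something like a person's face produces a rather "horror movie" look that can be genuinely startling.

Best not to do it alone in the middle of the night.

The code reuses the base code from the face detection post (^^).

Some unneeded code is mixed in, but it might come in handy someday, so I am leaving it in.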

----------------------------------------------

【FdActivity.java】

    package com.wisteria.detection.cd;

    import android.app.Activity;
    import android.os.Bundle;
    import android.util.Log;
    import android.view.Menu;
    import android.view.MenuItem;
    import android.view.Window;

    public class FdActivity extends Activity {
        private static final String TAG = "Sample::Activity";

        private MenuItem mItemRed;
        private MenuItem mItemBlue;
        private MenuItem mItemGreen;
        private MenuItem mItemYellow;
        private MenuItem mItemSkin;
        private MenuItem mItemRelease;

        //public static float minFaceSize = 0.5f;

        //add
        private FdView fdv;

        public FdActivity() {
            Log.i(TAG, "Instantiated new " + this.getClass());
        }

        /** Called when the activity is first created. */
        @Override
        public void onCreate(Bundle savedInstanceState) {
            Log.i(TAG, "onCreate");
            super.onCreate(savedInstanceState);
            requestWindowFeature(Window.FEATURE_NO_TITLE);

            //modify
            // Keep a reference to the view so the menu handler can switch colors
            fdv = new FdView(this);
            setContentView(fdv);
            //setContentView(new FdView(this));
        }

        @Override
        public boolean onCreateOptionsMenu(Menu menu) {
            Log.i(TAG, "onCreateOptionsMenu");
            mItemRed = menu.add("赤色");      // red
            mItemBlue = menu.add("青色");     // blue
            mItemGreen = menu.add("緑色");    // green
            mItemYellow = menu.add("黄色");   // yellow
            mItemSkin = menu.add("肌色");     // skin tone
            mItemRelease = menu.add("戻す");  // back to the normal preview

            mItemRed.setIcon(R.drawable.ic_menu_red);
            mItemBlue.setIcon(R.drawable.ic_menu_blue);
            mItemGreen.setIcon(R.drawable.ic_menu_green);
            mItemYellow.setIcon(R.drawable.ic_menu_yellow);
            mItemSkin.setIcon(R.drawable.ic_menu_skin);
            mItemRelease.setIcon(R.drawable.ic_menu_ret);
            return true;
        }

        @Override
        public boolean onOptionsItemSelected(MenuItem item) {
            Log.i(TAG, "Menu Item selected " + item);
            // Tell the view which color to detect, or turn detection off
            if (item == mItemRed)
                fdv.set_flag("red", true);
            else if (item == mItemBlue)
                fdv.set_flag("blue", true);
            else if (item == mItemGreen)
                fdv.set_flag("green", true);
            else if (item == mItemYellow)
                fdv.set_flag("yellow", true);
            else if (item == mItemSkin)
                fdv.set_flag("skin", true);
            else if (item == mItemRelease)
                fdv.set_flag("", false);
            return true;
        }
    }

----------------------------------------------

【FdView.java】

    package com.wisteria.detection.cd;

    import java.io.File;
    import java.io.FileOutputStream;
    import java.io.IOException;
    import java.io.InputStream;
    import java.util.LinkedList;
    import java.util.List;

    import org.opencv.android.Utils;
    import org.opencv.core.Core;
    import org.opencv.core.Mat;
    import org.opencv.core.Rect;
    import org.opencv.core.Scalar;
    import org.opencv.core.Size;
    import org.opencv.highgui.Highgui;
    import org.opencv.highgui.VideoCapture;
    import org.opencv.objdetect.CascadeClassifier;

    import android.content.Context;
    import android.graphics.Bitmap;
    import android.util.Log;
    import android.view.SurfaceHolder;

    //add
    import android.view.MotionEvent;
    import android.os.Environment;
    import android.graphics.Bitmap.CompressFormat;
    import org.opencv.core.Point;
    import org.opencv.imgproc.Imgproc;

    public class FdView extends SampleCvViewBase {
        private static final String TAG = "Sample::FdView";

        private Mat mRgba;
        private Mat mGray;
        private Mat mHSV2;
        private Mat mHSV;
        private Mat mRgba2;
        private CascadeClassifier mCascade;

        //add
        private String RectCoordinate = "";
        private boolean c_flag = false;  // true while color detection is switched on
        private String c_type = "";      // name of the color to detect

        //add
        private int tl_x = 0;
        private int tl_y = 0;
        private int br_x = 0;
        private int br_y = 0;
        private int prev_tl_x = 0;
        private int prev_tl_y = 0;
        private int prev_br_x = 0;
        private int prev_br_y = 0;

        public FdView(Context context) {
            super(context);

            // Left over from the face detection sample: copy the cascade out of
            // the raw resources into a temporary file and load it.
            try {
                InputStream is = context.getResources().openRawResource(R.raw.lbpcascade_frontalface);
                File cascadeDir = context.getDir("cascade", Context.MODE_PRIVATE);
                File cascadeFile = new File(cascadeDir, "lbpcascade_frontalface.xml");
                FileOutputStream os = new FileOutputStream(cascadeFile);

                byte[] buffer = new byte[4096];
                int bytesRead;
                while ((bytesRead = is.read(buffer)) != -1) {
                    os.write(buffer, 0, bytesRead);
                }
                is.close();
                os.close();

                mCascade = new CascadeClassifier(cascadeFile.getAbsolutePath());
                if (mCascade.empty()) {
                    Log.e(TAG, "Failed to load cascade classifier");
                    mCascade = null;
                } else
                    Log.i(TAG, "Loaded cascade classifier from " + cascadeFile.getAbsolutePath());

                cascadeFile.delete();
                cascadeDir.delete();
            } catch (IOException e) {
                e.printStackTrace();
                Log.e(TAG, "Failed to load cascade. Exception thrown: " + e);
            }
        }

        @Override
        public void surfaceChanged(SurfaceHolder _holder, int format, int width, int height) {
            super.surfaceChanged(_holder, format, width, height);

            synchronized (this) {
                // initialize Mats before usage
                mGray = new Mat();
                mRgba = new Mat();
                mRgba2 = new Mat();
                mHSV = new Mat();
                mHSV2 = new Mat();
            }
        }

        @Override
        protected Bitmap processFrame(VideoCapture capture) {
            if (c_flag) {
                capture.retrieve(mRgba, Highgui.CV_CAP_ANDROID_COLOR_FRAME_BGRA);
                Imgproc.cvtColor(mRgba, mHSV, Imgproc.COLOR_BGR2HSV, 3);

                // Build a binary mask of the pixels inside the HSV range of the chosen color.
                // Strings are compared with equals(), not ==.
                if ("red".equals(c_type)) {
                    Core.inRange(mHSV, new Scalar(0, 100, 30), new Scalar(5, 255, 255), mHSV2);    // red
                } else if ("blue".equals(c_type)) {
                    Core.inRange(mHSV, new Scalar(90, 50, 50), new Scalar(125, 255, 255), mHSV2);  // blue
                } else if ("green".equals(c_type)) {
                    Core.inRange(mHSV, new Scalar(50, 50, 50), new Scalar(80, 255, 255), mHSV2);   // green
                } else if ("yellow".equals(c_type)) {
                    Core.inRange(mHSV, new Scalar(20, 50, 50), new Scalar(40, 255, 255), mHSV2);   // yellow
                } else if ("skin".equals(c_type)) {
                    Core.inRange(mHSV, new Scalar(0, 38, 89), new Scalar(20, 192, 243), mHSV2);    // skin tone
                }

                // Convert the single-channel mask back to RGBA for display
                Imgproc.cvtColor(mHSV2, mRgba, Imgproc.COLOR_GRAY2BGR, 0);
                Imgproc.cvtColor(mRgba, mRgba2, Imgproc.COLOR_BGR2RGBA, 0);

                Bitmap bmp = Bitmap.createBitmap(mRgba2.cols(), mRgba2.rows(), Bitmap.Config.ARGB_8888);
                if (Utils.matToBitmap(mRgba2, bmp))
                    return bmp;

                bmp.recycle();
                return null;
            } else {
                // Detection is off: show the camera frame unchanged
                capture.retrieve(mRgba, Highgui.CV_CAP_ANDROID_COLOR_FRAME_RGBA);

                Bitmap bmp = Bitmap.createBitmap(mRgba.cols(), mRgba.rows(), Bitmap.Config.ARGB_8888);
                if (Utils.matToBitmap(mRgba, bmp)) {
                    return bmp;
                }

                bmp.recycle();
                return null;
            }
        }

        public boolean onTouchEvent(MotionEvent event) {
            if (event.getAction() == MotionEvent.ACTION_DOWN) {
                //get_cvPicture();
            }
            return true;
        }

        // Called from FdActivity: c is the color name, f switches detection on or off
        public void set_flag(String c, boolean f) {
            c_type = c;
            c_flag = f;
        }

        public void get_cvPicture() {
            Bitmap bmp = null;
            synchronized (this) {
                if (mCamera.grab()) {
                    bmp = processFrame(mCamera);
                }
            }
            if (bmp == null) {
                return;
            } else {
                bmp.recycle();
            }
        }

        //---------------------------------------
        @Override
        public void run() {
            super.run();

            synchronized (this) {
                // Explicitly deallocate Mats
                if (mRgba != null)
                    mRgba.release();
                if (mGray != null)
                    mGray.release();
                if (mRgba2 != null)
                    mRgba2.release();
                if (mHSV != null)
                    mHSV.release();
                if (mHSV2 != null)
                    mHSV2.release();

                mRgba = null;
                mGray = null;
                mRgba2 = null;
                mHSV = null;
                mHSV2 = null;
            }
        }
    }

----------------------------------------------
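As the number of colors grows, the if/else chain in processFrame gets long. One way to keep it manageable is to hold the thresholds in a table keyed by color name. The following is only a rough sketch: `ColorRanges`, `Range`, and `lookup` are hypothetical names I made up for illustration, and the numbers are simply the same HSV thresholds used in FdView above.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper (not part of the app above): HSV thresholds keyed by color name. */
final class ColorRanges {

    /** Lower and upper bounds, each stored as {H, S, V}. */
    static final class Range {
        final double[] lower;
        final double[] upper;

        Range(double[] lower, double[] upper) {
            this.lower = lower;
            this.upper = upper;
        }
    }

    private static final Map<String, Range> RANGES = new LinkedHashMap<>();

    static {
        // Same values as the Core.inRange calls in FdView
        RANGES.put("red",    new Range(new double[] {0, 100, 30}, new double[] {5, 255, 255}));
        RANGES.put("blue",   new Range(new double[] {90, 50, 50}, new double[] {125, 255, 255}));
        RANGES.put("green",  new Range(new double[] {50, 50, 50}, new double[] {80, 255, 255}));
        RANGES.put("yellow", new Range(new double[] {20, 50, 50}, new double[] {40, 255, 255}));
        RANGES.put("skin",   new Range(new double[] {0, 38, 89},  new double[] {20, 192, 243}));
    }

    /** Returns the thresholds for the given color name, or null if the name is unknown. */
    static Range lookup(String name) {
        return RANGES.get(name);
    }

    private ColorRanges() {
    }
}
```

With something like this, processFrame could in principle call `ColorRanges.lookup(c_type)` once and, if the result is not null, pass `new Scalar(range.lower)` and `new Scalar(range.upper)` to `Core.inRange`. I have not tried this on a device, so treat it as a starting point rather than a tested change.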

【SampleCvViewBase.java】

    package com.wisteria.detection.cd;

    import java.util.List;

    import org.opencv.core.Size;
    import org.opencv.highgui.VideoCapture;
    import org.opencv.highgui.Highgui;

    import android.content.Context;
    import android.graphics.Bitmap;
    import android.graphics.Canvas;
    import android.util.Log;
    import android.view.SurfaceHolder;
    import android.view.SurfaceView;

    public abstract class SampleCvViewBase extends SurfaceView implements SurfaceHolder.Callback, Runnable {
        private static final String TAG = "Sample::SurfaceView";

        private SurfaceHolder mHolder;
        public VideoCapture mCamera; //modify
        private FpsMeter mFps;

        public SampleCvViewBase(Context context) {
            super(context);
            mHolder = getHolder();
            mHolder.addCallback(this);
            mFps = new FpsMeter();
            Log.i(TAG, "Instantiated new " + this.getClass());
        }

        public void surfaceChanged(SurfaceHolder _holder, int format, int width, int height) {
            Log.i(TAG, "surfaceChanged");
            synchronized (this) {
                if (mCamera != null && mCamera.isOpened()) {
                    Log.i(TAG, "before mCamera.getSupportedPreviewSizes()");
                    List<Size> sizes = mCamera.getSupportedPreviewSizes();
                    Log.i(TAG, "after mCamera.getSupportedPreviewSizes()");
                    int mFrameWidth = width;
                    int mFrameHeight = height;

                    // selecting optimal camera preview size:
                    // pick the supported size whose height is closest to the surface height
                    {
                        double minDiff = Double.MAX_VALUE;
                        for (Size size : sizes) {
                            if (Math.abs(size.height - height) < minDiff) {
                                mFrameWidth = (int) size.width;
                                mFrameHeight = (int) size.height;
                                minDiff = Math.abs(size.height - height);
                            }
                        }
                    }

                    mCamera.set(Highgui.CV_CAP_PROP_FRAME_WIDTH, mFrameWidth);
                    mCamera.set(Highgui.CV_CAP_PROP_FRAME_HEIGHT, mFrameHeight);
                }
            }
        }

        public void surfaceCreated(SurfaceHolder holder) {
            Log.i(TAG, "surfaceCreated");
            mCamera = new VideoCapture(Highgui.CV_CAP_ANDROID);
            if (mCamera.isOpened()) {
                // Start the frame processing thread (see run() below)
                (new Thread(this)).start();
            } else {
                mCamera.release();
                mCamera = null;
                Log.e(TAG, "Failed to open native camera");
            }
        }

        public void surfaceDestroyed(SurfaceHolder holder) {
            Log.i(TAG, "surfaceDestroyed");
            if (mCamera != null) {
                synchronized (this) {
                    mCamera.release();
                    mCamera = null;
                }
            }
        }

        protected abstract Bitmap processFrame(VideoCapture capture);

        public void run() {
            Log.i(TAG, "Starting processing thread");
            mFps.init();

            while (true) {
                Bitmap bmp = null;

                synchronized (this) {
                    if (mCamera == null)
                        break;

                    if (!mCamera.grab()) {
                        Log.e(TAG, "mCamera.grab() failed");
                        break;
                    }

                    bmp = processFrame(mCamera);

                    mFps.measure();
                }

                if (bmp != null) {
                    Canvas canvas = mHolder.lockCanvas();
                    if (canvas != null) {
                        // Draw the processed frame centered on the surface
                        canvas.drawBitmap(bmp, (canvas.getWidth() - bmp.getWidth()) / 2, (canvas.getHeight() - bmp.getHeight()) / 2, null);
                        mFps.draw(canvas, (canvas.getWidth() - bmp.getWidth()) / 2, 0);
                        mHolder.unlockCanvasAndPost(canvas);
                    }
                    bmp.recycle();
                }
            }

            Log.i(TAG, "Finishing processing thread");
        }
    }


Appendix

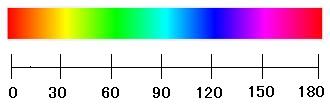

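The Scalar arguments passed to Core.inRange are H, S, V values, in that order.

Note that H (hue) here runs from 0 to 180, not 0 to 360. For 8-bit images OpenCV stores the hue angle divided by 2 so that it fits in one byte; S and V run from 0 to 255.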
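To make the H scale concrete, here is a small plain-Java sketch that converts an RGB pixel to HSV on the same scale (H halved into 0 to 179, S and V in 0 to 255) and checks it against a lower/upper bound the way an inRange test would. `HsvSketch`, `toOpenCvHsv`, and `inRange` are hypothetical names of my own, not OpenCV functions, and the rounding is only an approximation of what OpenCV does internally.

```java
/** Hypothetical helper, not part of OpenCV: approximates the 8-bit HSV scale. */
final class HsvSketch {

    /** Converts 8-bit RGB to {H in 0..179, S in 0..255, V in 0..255}. */
    static int[] toOpenCvHsv(int r, int g, int b) {
        int max = Math.max(r, Math.max(g, b));
        int min = Math.min(r, Math.min(g, b));
        int delta = max - min;

        double hueDeg; // hue in degrees, 0..360
        if (delta == 0) {
            hueDeg = 0.0; // gray pixel: hue is undefined, use 0
        } else if (max == r) {
            hueDeg = 60.0 * (g - b) / delta;
        } else if (max == g) {
            hueDeg = 120.0 + 60.0 * (b - r) / delta;
        } else {
            hueDeg = 240.0 + 60.0 * (r - g) / delta;
        }
        if (hueDeg < 0) {
            hueDeg += 360.0;
        }

        // Halve the angle so it fits in one byte; 180 wraps back to 0
        int h = (int) Math.round(hueDeg / 2.0) % 180;
        int s = (max == 0) ? 0 : (int) Math.round(255.0 * delta / max);
        return new int[] {h, s, max};
    }

    /** True when every channel lies within [lower, upper], bounds inclusive. */
    static boolean inRange(int[] hsv, int[] lower, int[] upper) {
        for (int i = 0; i < 3; i++) {
            if (hsv[i] < lower[i] || hsv[i] > upper[i]) {
                return false;
            }
        }
        return true;
    }

    private HsvSketch() {
    }
}
```

With this sketch, pure red (255, 0, 0) comes out as H = 0 and pure blue (0, 0, 255) as H = 120, which falls inside the blue range 90 to 125 used above. A slightly bluish red such as (255, 0, 10) comes out as H = 179, at the far end of the scale. Red sits on both sides of the wrap-around point, so a range of 0 to 5 alone would not match it; adding a second range near 179 might be worth experimenting with.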


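Other OpenCV-related pages

OpenCV notes

Getting the coordinates of a rectangular region

Building a cascade with OpenCV 2.3.1 and using it on Android

OpenCV on Android (Face Detection)

OpenCV on Android (Feature Point Detection)

Image recognition with Android OpenCV 2.3.1

Trying out OpenCV 2.4.6 on Android

Getting Hatsune Miku to say hello with OpenCV + NyMMD

Building an Android OpenCV 2.4.6 face detection app from scratch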